In any strategic undertaking, trusted data is key to making better decisions that unlock new opportunities for your organization. One of the first steps in the process is to access the data you need and replicate it to the cloud for robust analytics and reporting.

But the process doesn’t end there. Next, it’s time for the data steward to perform additional clean-up and verification of data in the cloud, build governance policies, and monitor for issues over time.

Reduce errors, save time, and cut costs with a proactive approach

You need to make decisions based on accurate, consistent, and complete data to achieve the best results for your business goals. That’s where the Data Quality service of the Precisely Data Integrity Suite can help.

With advanced algorithms and machine learning techniques that detect and correct errors and inconsistencies, you can maintain the integrity of your data and avoid costly mistakes.

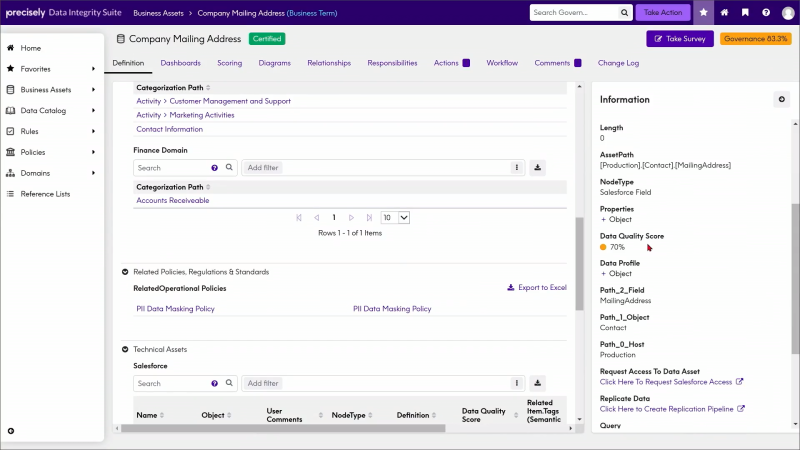

Another key to success here is ensuring data properly aligns with business value, which is accomplished by setting policies that govern the use of data over time.

Once you’ve connected your data to key business goals and initiatives, it’s time to establish policies and procedures, including defining data ownership, establishing access controls, and managing data retention and deletion. All of these steps can be taken in the Data Integrity Suite’s Data Governance service, helping you to better manage and protect data throughout its lifecycle.

Now, let’s talk about monitoring your data.

Manual spot-checking for changes or errors within a dataset means going out of your way to closely comb through data – whether the data has changed or not. It’s time-consuming and tedious, and even with all that effort, we’re still only human. Changes big and small can often be overlooked, leading to costly downstream issues for the business.

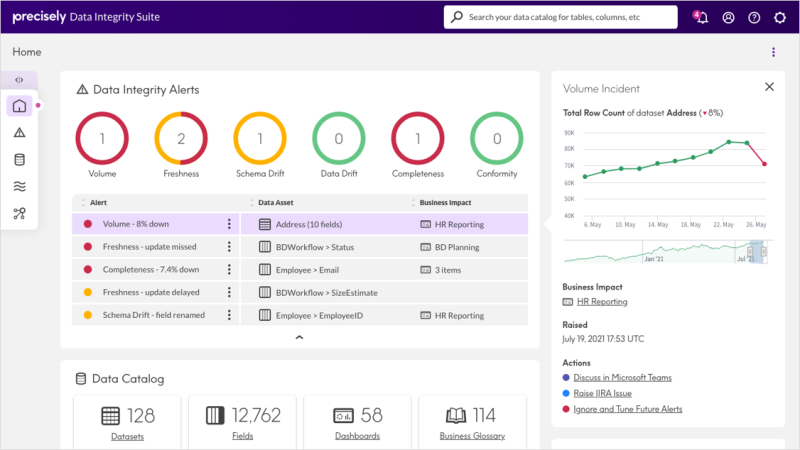

So, when the people responsible for the data receive automated anomaly alerts, they can detect and repair issues proactively instead of hunting for them by hand. That’s a game-changer.

Powered by continuous machine learning-based monitoring and proactive alerts, the Data Observability service of the Suite helps you save time, reduce costs, and get a clearer picture of your data health – meaning you build trust in data for analytics and operational use cases faster.
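To make the idea of an automated anomaly alert concrete, here is a minimal sketch in Python. It is not how the Data Observability service works internally: it swaps in a simple z-score check as a stand-in for machine learning-based monitoring, and the function name, the threshold, and the row counts are all hypothetical.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, threshold=3.0):
    """Return True when `latest` sits far outside the range seen in `history`.

    Hypothetical helper: a plain z-score test, used here only to
    illustrate the idea of an automated alert.
    """
    if len(history) < 2:
        # Not enough past observations to estimate normal variation.
        return False
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # History is perfectly flat, so any change at all is unusual.
        return latest != mu
    return abs(latest - mu) / sigma > threshold


# Made-up daily row counts for one table.
daily_row_counts = [1000, 1010, 990, 1005, 995]

if is_anomalous(daily_row_counts, 400):
    print("ALERT: today's row count looks unusual")
```

A check like this runs on every refresh whether or not anyone is looking, which is the advantage over manual spot-checking described above.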

Combining data governance, data quality, and data observability capabilities helps you make more informed decisions based on the most accurate and up-to-date information.

How does it work for real-world use cases?

Let’s continue with a common scenario: your organization needs to expand a product’s reach into a new market.

Once the location and address data needed have been accessed in the data catalog and replicated in the cloud, it’s time to move on to the process we’ve covered above – cleaning, governing, and monitoring that data over time.

Let’s break that down further into three simple steps.

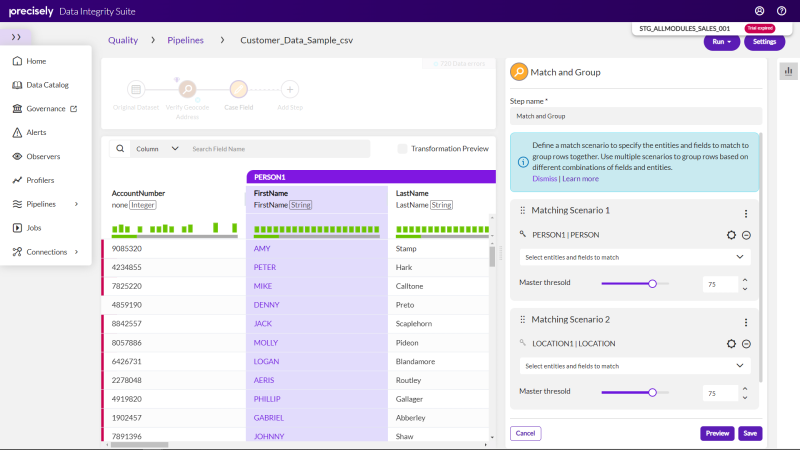

Step 1: Standardize and verify data in the cloud

This first step is critical in any scenario – but particularly for this example, when you consider that location and address data can be some of the most challenging types for organizations to manage. You must ensure the data you base decisions on is accurate, complete, and contextualized.

The Data Quality service helps you accomplish this by validating, geocoding, and enriching data to help ensure the best results based on the most current information.
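As a rough illustration of what standardizing and verifying address data involves, the sketch below normalizes a street line and flags incomplete records. It is a simplified assumption of ours, not the Data Quality service’s API: the abbreviation table, the required fields, and both function names are hypothetical, and real address validation and geocoding rely on reference data that this example does not include.

```python
import re

# Hypothetical abbreviation table. A real service uses far richer
# reference data (for example, "ST" can also mean "SAINT").
ABBREVIATIONS = {"ST": "STREET", "AVE": "AVENUE", "RD": "ROAD", "BLVD": "BOULEVARD"}

# Hypothetical list of fields a record needs before it counts as complete.
REQUIRED_FIELDS = ("street", "city", "postal_code")


def standardize_street(raw):
    """Uppercase, strip periods and commas, collapse spaces, expand abbreviations."""
    tokens = re.sub(r"[.,]", " ", raw).upper().split()
    return " ".join(ABBREVIATIONS.get(token, token) for token in tokens)


def missing_fields(record):
    """List the required fields that are absent or blank in a record."""
    return [f for f in REQUIRED_FIELDS if not str(record.get(f) or "").strip()]


record = {"street": "  123 main st. ", "city": "Springfield", "postal_code": ""}
record["street"] = standardize_street(record["street"])
print(record["street"])
print(missing_fields(record))
```

Even this toy version shows why the step matters: two spellings of the same street only match after standardization, and a record with no postal code is caught before it feeds a decision.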

Step 2: Link data to governance rules and policies

Once the location and address data have been cleaned and verified, you must link those assets to the business goal and establish data governance policies and procedures.

This is all achieved in the Data Governance service, which helps ensure data is appropriately managed and protected throughout its lifecycle and, critically, that it’s directly aligned with business value.

Step 3: Monitor continuously for issues

With the data cleaned up and governance policies in place, it’s critical to use real-time monitoring to track changes and identify any issues.

Using the Data Observability service, set up alerts so that the primary person responsible for maintaining the data assets can proactively track and fix issues to ensure the best quality information possible.

As alerts come in, the data owner can put each one in context by looking at trends over time. Tables can be examined to see alerts, such as schema drift and freshness, while detailed charts provide a visual overview of historical trends.
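The two alert types mentioned above, schema drift and freshness, can each be sketched in a few lines of Python. This is an illustrative assumption, not the Data Observability service’s implementation: the function names, the column names, and the 24-hour freshness window are all hypothetical.

```python
from datetime import datetime, timedelta, timezone


def detect_schema_drift(expected, observed):
    """Compare two {column: type} mappings and report what changed."""
    shared = set(expected) & set(observed)
    return {
        "added": sorted(set(observed) - set(expected)),
        "removed": sorted(set(expected) - set(observed)),
        "retyped": sorted(c for c in shared if expected[c] != observed[c]),
    }


def is_stale(last_updated, max_age, now=None):
    """Return True when the data is older than the allowed window."""
    now = now or datetime.now(timezone.utc)
    return now - last_updated > max_age


# Hypothetical schema agreed for an address table, and what arrived today.
expected = {"address": "string", "postcode": "string", "lat": "float"}
observed = {"address": "string", "postcode": "int", "lon": "float"}
print(detect_schema_drift(expected, observed))

# Hypothetical freshness rule: the table should refresh at least daily.
last_load = datetime.now(timezone.utc) - timedelta(hours=30)
print(is_stale(last_load, max_age=timedelta(hours=24)))
```

Schema drift catches structural changes such as a dropped or retyped column, while freshness catches a feed that has silently stopped updating. Both are hard to spot by eye in a large dataset.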

With these three steps, the team responsible for the product expansion can confidently move forward with decision-making grounded in the most accurate, up-to-date data.

Precisely partnered with Drexel University’s LeBow College of Business to survey more than 450 data and analytics professionals worldwide about the state of their data programs. Now, we’re sharing the ground-breaking results in the 2023 Data Integrity Trends and Insights Report.